PyTorch and Android

Recently, a client and I discussed the use of PyTorch on mobile/IoT-like devices. Naturally, the Caffe2 Android tutorial was a starting point. Getting it to work with Caffe2 from PyTorch and recent Android wasn't trivial, though. Apparently, other people have not had much luck either: I got a dozen questions about it on the first day after mentioning it in a discussion.

Here is how to build the demo, along with some thoughts about getting the more pytorchy libtorch working on Android.

Any PyTorch model on Android

Have you ever felt that it was too hard to get your model onto Android? The promise of PyTorch's JIT and C++ interface is that you can very easily get your model into C++. Wouldn't it be neat if you could do the same for your Android device?

Recently, FAIR open-sourced a benchmark implementation of MaskRCNN. So here is the challenge we set ourselves: can we make one of the pretrained models run on Android? This takes two steps:

- trace the model using the awesome PyTorch JIT; we are glad to contribute this tracing work (and along the way we took the opportunity to implement a couple of improvements to the JIT itself),

- get PyTorch running on Android. This will take a bit more effort, and you can help make it happen (see below).

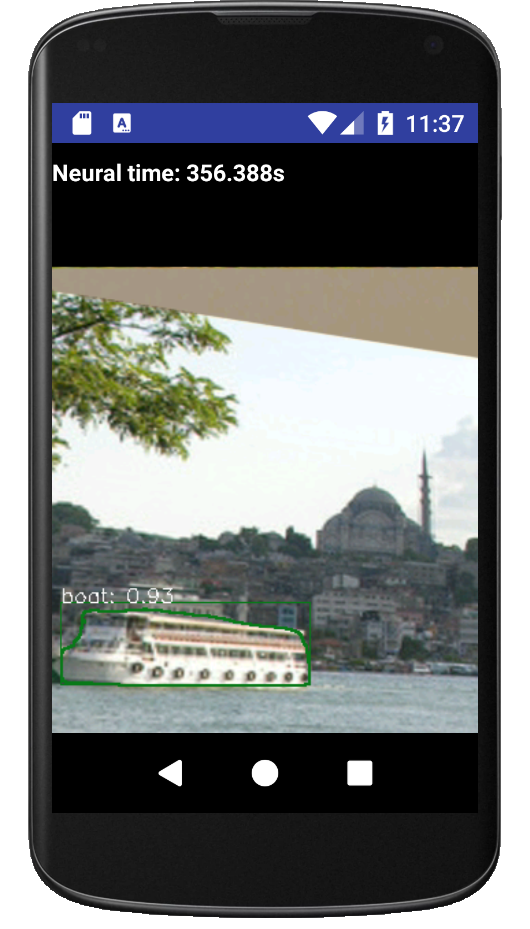

Above is what the pretrained MaskRCNN looks like on Android.

From PyTorch to Android

PyTorch is my favorite AI framework, and I'm not surprised that you like it, too. Recently, PyTorch gained support for being used directly from C++ and for deploying models there. Peter, who did much of the work, wrote a great tutorial about it.

The Android story for PyTorch seems a bit muddier: you can use ONNX to get from PyTorch to Caffe2. There is also an old demo showing live classification on mobile phones with Caffe2, but it had gathered quite a bit of dust.

I believe that the C++ tutorial shows how things should work: you have ATen and torch in C++ (they feel just like PyTorch in Python), load your model, and you are ready to go! No cumbersome ONNX, no learning enough Caffe2 to integrate it into your mobile app. Just PyTorch and libtorch!

I'll show you exactly how I dusted off the Caffe2 AICamera demo and got the first path, via Caffe2, working. After that I will tell you how, with your help, I would like to make the elegant libtorch solution happen.

Awesome AI apps with C++ libtorch on Android

Do you ever see (or create) a cool neural network application and think that would be awesome on mobile?

Now, if you could just do

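```python
import torch

# model: your trained nn.Module; img: an example input tensor of the right shape
model.eval()  # trace in inference mode (e.g. dropout disabled)
with torch.no_grad():
    traced_script_module = torch.jit.trace(model, img)
traced_script_module.save("mymodel.pt")
```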

and then on Android

```cpp
// stream: a std::istream over the serialized mymodel.pt
module = torch::jit::load(stream);
```

and later

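```cpp
#include <torch/script.h>
#include <memory>
#include <vector>

// Set once via torch::jit::load. The shared_ptr matches the libtorch API of
// the time; newer releases return torch::jit::Module by value instead.
static std::shared_ptr<torch::jit::script::Module> module;

static at::Tensor transform_tensor(at::Tensor& input) {
    at::Tensor output;  // stays undefined if no model has been loaded
    if (module != nullptr) {
        // forward() takes its arguments as a list of IValues
        std::vector<torch::jit::IValue> inputs;
        inputs.push_back(input);
        output = module->forward(inputs).toTensor();
    }
    return output;
}
```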

and you're done!
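One detail the snippet glosses over is where stream comes from. On Android, the traced mymodel.pt would typically ship as an app asset, and one plausible route (an assumption, not something the code above shows) is to get its bytes through the NDK asset manager (AAsset_getBuffer and AAsset_getLength) and wrap them in a standard stream, since torch::jit::load accepts a std::istream. The helper stream_from_buffer below is a hypothetical name and uses only the C++ standard library:

```cpp
#include <cassert>
#include <cstddef>
#include <ios>
#include <sstream>
#include <string>

// Hypothetical helper: wrap model bytes that are already in memory (for
// instance an Android asset buffer) in a binary std::istringstream.
// std::string(data, size) copies the bytes, so the source buffer may be
// released afterwards; the explicit size keeps embedded '\0' bytes intact.
std::istringstream stream_from_buffer(const char* data, std::size_t size) {
    assert(data != nullptr || size == 0);
    return std::istringstream(std::string(data, size),
                              std::ios::in | std::ios::binary);
}

// Intended use with libtorch on the device (not compiled in this sketch;
// asset_data and asset_size are placeholders, and the const void* from
// AAsset_getBuffer would need a static_cast<const char*>):
//   std::istringstream stream = stream_from_buffer(asset_data, asset_size);
//   module = torch::jit::load(stream);
```

Copying into a string costs one extra buffer the size of the model, which keeps the lifetime handling trivial; for large models you may prefer to avoid the copy.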

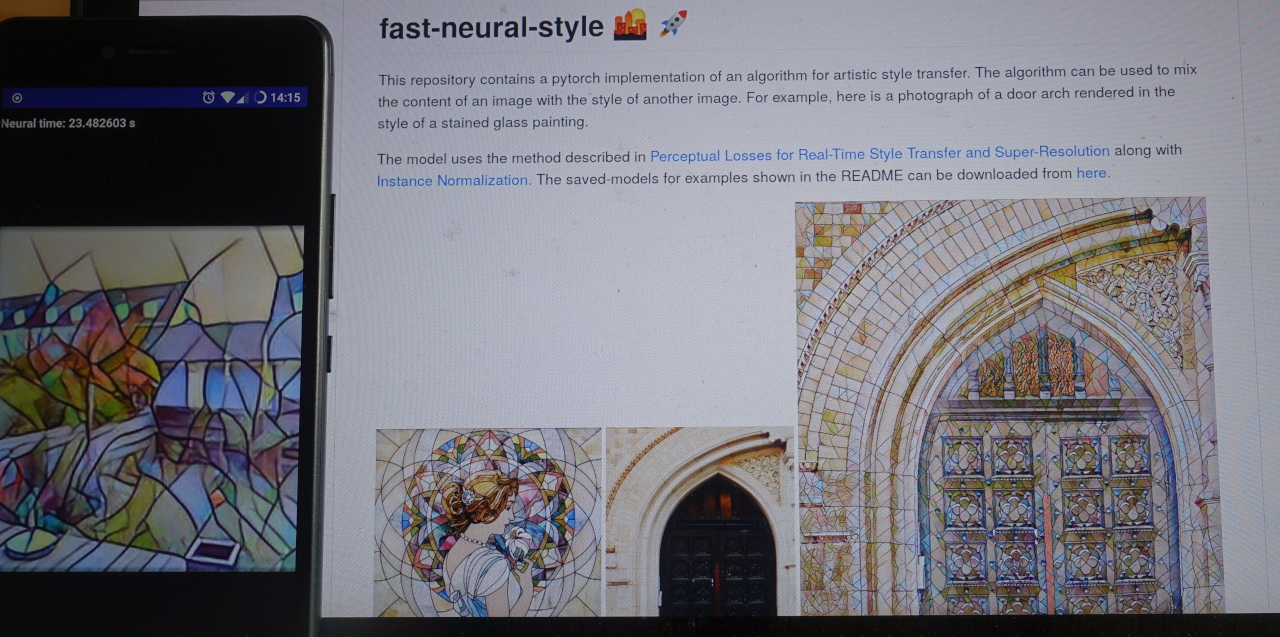

Above is the original net from PyTorch's neural style transfer example running in 23 seconds on a low-end mobile phone. Not quite live like the Prisma-style filters in Facebook's apps, but I'd venture that they have better hardware and mobile-adapted neural nets.
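Before transform_tensor can run, a camera frame has to be turned into the float tensor the style network was traced with. As a rough sketch, assuming the frame arrives as an interleaved RGBA byte buffer and that the model expects a 1 x 3 x H x W tensor with values in 0..255 (the scale PyTorch's fast_neural_style example feeds its network), the layout conversion is a simple loop. The name rgba_to_chw is hypothetical, and only the standard library is used:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: convert an interleaved RGBA frame (height rows of
// width pixels, 4 bytes per pixel, row-major) into planar float data in
// C x H x W order. Alpha is dropped and values stay in the 0..255 range.
std::vector<float> rgba_to_chw(const std::vector<std::uint8_t>& rgba,
                               std::size_t height, std::size_t width) {
    assert(rgba.size() == height * width * 4);
    const std::size_t plane = height * width;  // pixels per channel plane
    std::vector<float> chw(3 * plane);
    for (std::size_t i = 0; i < plane; ++i) {
        chw[i] = rgba[4 * i];                  // R plane
        chw[plane + i] = rgba[4 * i + 1];      // G plane
        chw[2 * plane + i] = rgba[4 * i + 2];  // B plane
    }
    return chw;
}

// With libtorch (not compiled here), the buffer could then be wrapped as
//   at::Tensor input = torch::from_blob(
//       chw.data(), {1, 3, static_cast<int64_t>(height),
//                    static_cast<int64_t>(width)}).clone();
// from_blob does not copy, hence clone() so the tensor owns its data.
```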

Updating the AICamera demo to work with Caffe2 from PyTorch/master

But we're not there yet, so here is what you can do to get Caffe2 to run with PyTorch/master.

First, I built the Android libraries of Caffe2. Here we are off to a good start, because Caffe2 provides a script, scripts/build_android.sh, that puts you on the right track. A few tweaks were necessary, though: recent Android NDKs have moved from gcc to clang as the compiler of choice, so I changed the toolchain (that was easy enough, as the build script has a switch for it). You will also want an x86 build for debugging. Just changing the architecture was not enough, though: I also had to disable building the accelerated AVX kernels, which won't work on mobile anyway. So I added a switch for them and built x86 libraries. I published these changes and will turn the bug report discussion into a submission.

Next, I tried to get the AICamera to compile in a recent Android Studio. The Android toolchain infrastructure is large and complex, and you have to move versions in Gradle forward and backward quite a bit (I seem to have picked a date with some major Android upgrades: quite a few packages could not be found in the package repositories). In an eerie reminder of TensorFlow's graph abstraction, you also have to rebuild ("sync") your Gradle build system whenever you change the configuration. Maybe that tool needs an "eager mode", too, ha!

To deal with the new set of libraries that the Caffe2 build produces, CMakeLists.txt needed to be updated once the Caffe2 libraries and headers had been copied over.

The smallest part was a few code changes in the native library on Android to keep up with the Caffe2 updates. With the new x86 Android packages I built, debugging worked well.

My version of the AICamera is available, and I put the instructions for building your own version there, too.

The prediction results seem to be so-so, but I suspect that comes down to the model used, not the camera.

How to get libtorch on Android

So while having the Caffe2 demo work with PyTorch master is great, I think it would be even better to get the easy way, C++ libtorch, working on Android. As you can see above, it would make the transfer to Android almost trivial and let you ship high-quality AI-enabled apps more easily. As far as I can see, though, no one is immediately planning to work on it.

Crowdfunding

In the first half of November, I proposed to do this as a B2B crowdfunded project and get a first version out by Christmas. It turned out there has not yet been enough countable (in EUR) interest. It will be interesting to see whether there are other ways to get there. If you have ideas, please do drop me a mail!

If you're curious about the proof-of-concept style transfer, here is an implementation of neustyle that uses PyTorch, NNPACK, and an adaptation of the PyTorch style transfer example using 32 channels maximum, 6 residual blocks and reducing 32->8->4 channels in the expansion (so the architecture matches the TF mobile style transfer example, but without the quantization). This gets the time down to ~2.5 seconds on my (slow) mobile phone.
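A back-of-the-envelope count shows why shrinking the channels pays off: a k x k convolution costs in_ch * out_ch * k * k multiply-accumulates per output pixel, so it grows with the square of the channel width. The sketch below compares only the residual stage per pixel of its feature map, assuming two 3x3 convolutions per residual block as in PyTorch's TransformerNet (five blocks at 128 channels) against six blocks at the 32-channel maximum (an assumption about where that maximum sits). It ignores the other layers, normalization, resolution differences and NNPACK, so it does not explain the measured timings:

```cpp
#include <cassert>
#include <cstdint>

// Multiply-accumulates per output pixel of a k x k convolution
// (bias, normalization and activation ignored).
std::int64_t conv_macs_per_pixel(std::int64_t in_ch, std::int64_t out_ch,
                                 std::int64_t k) {
    assert(in_ch > 0 && out_ch > 0 && k > 0);
    return in_ch * out_ch * k * k;
}

// Residual stage: `blocks` residual blocks with two 3x3 convolutions each,
// all at `ch` channels (assumed layout, see text).
std::int64_t residual_stage_macs_per_pixel(std::int64_t blocks,
                                           std::int64_t ch) {
    return blocks * 2 * conv_macs_per_pixel(ch, ch, 3);
}

// Original TransformerNet residual stage: 5 blocks at 128 channels.
//   residual_stage_macs_per_pixel(5, 128) == 1474560
// Reduced variant (assumed): 6 blocks at 32 channels.
//   residual_stage_macs_per_pixel(6, 32)  == 110592
// Ratio 1474560 / 110592 = 40 / 3, i.e. roughly 13x fewer operations
// in this stage despite the extra block.
```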